Aslanna wrote: ↑March 29, 2023, 12:57 pm

Is there an AI for sound/music generation?

I saw one voice AI that used you're voice to sing in a different language. You could take a foreign language (to you) song and then use it to make it sound like it was your voice singing the song in the other language.

I can't remember where I heard it but they were talking about creating song, mixing styles of songs like a prompt. "write a love song using trance and country combined"

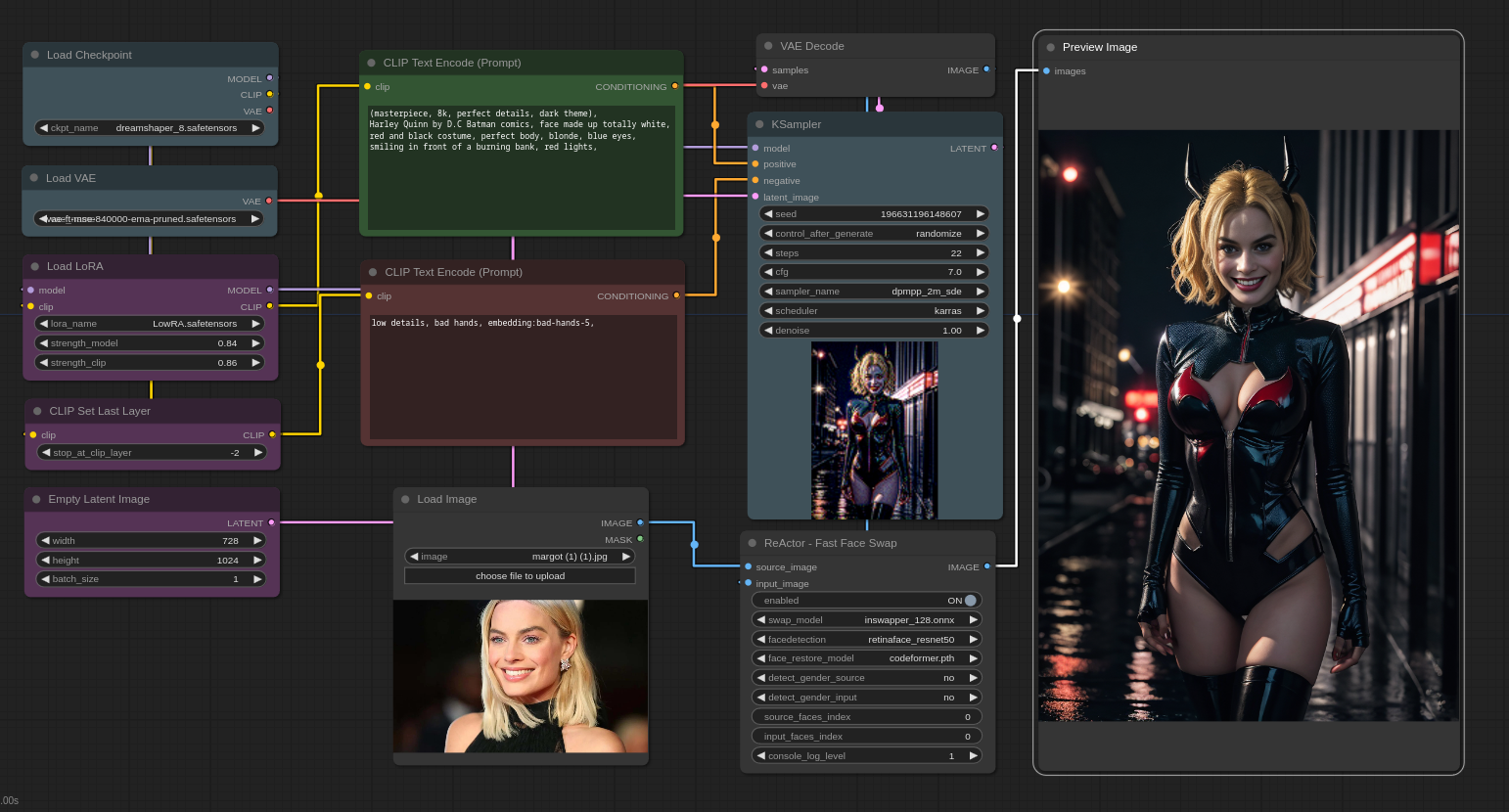

As for AI Art, Stable Diffusion is still really impressive. First, I recommend using Automatic1111 as the GUI for stable diffusion. Easy Diffusion might be easiest to setup but Automatic1111 is where 90% of people are and where all the extensions and support are as well. Easy Diffusion is still working on adding Lora etc and allowing better upscalers etc so it might be good in the future but you need that functionality for anything serious. Easiest way to install Automatic1111 is through GitHub but I did see a stand alone installer and also if you're good with command lines, there are instructions for that as well. Make sure you choose to add PATH when installing Python. That seems to be the number one thing that screws people up. These things work on linux etc so not your normal install process.

As an example of the wide variety of outputs, some person used a single prompt asking for "1 goblin in a cell" and ran it through 79 different stable diffusion models and this is what he got"

go to that URL to see all the images, but it looks like this forum as an imgur plugin because i can see and scroll the images embedded in this post. Interesting.

Couple things regarding the above example:

Each set of four images you see are from the same model. You can see right from the very first image how different asking even the same model for the same prompt can be when generating images.

Basically there are several settings when you generate an image:

Model: this is the main file that's trained on thousands of images and as you can see, depending on how it's trained, the results are quite different.

CFG Scale: this adjusts how strictly the image adheres to the prompts instructions. 7-8 is the default, lower the number for stricter prompt results, a higher number gets you more random results (which can be interesting)

Sampling Steps: Sampling steps determine the detail and how many "passes" the generated image gets. Depending on the "sampling method", see next desc, something like "Euler" only needs 20 steps to create an image, but you can use a more advanced sampler like "DPM++ SDE Karras" which give better results and usually needs more steps, like 40 to create the image. One trick to save time on generating images until you find one you want to tweak is to generate a batch of like 6 Eular images, then when you find one you like, you send that image from text2image to image2image processing. You can then take that image and make smaller generated modifications to it while at the same time using a better sampler that will increase the quality of the image and then after that you can use upscalers to refined the sharpness and resolution even more. Using this method you can generate a lot of images in a short time before spending long cycles on the image you want to keep and perfect.

Sampling Method: Eular, DDIM, DPM etc. as with those 79 model images you see above, they are also influenced by the sample, some better at photo realistic images, others better for art, anime, etc. What's crazy is those 79 sets of 4 images in the link, are all most likely based on a single sampler, changing the sampler has a huge impact on the end result as well.

Denoising Strength: used in image2image, once you have the image you like and send it to image2image (there's also inpaint but will discuss that in a sec) you want to start narrowing down the changes in the image as you generate more versions. so the lower the denoise setting the less the image will change and vice versa. So if you like the basic concept (how the character is positioned and the background, you use a lower denoise, if you want more change, like a bottle currently sitting onto a table randomly morphing into a lamp for example, you use higher denoise...but also keep in mind you still are using CFG, so keeping that low will make less changes and higher will do more intense things like even swap a gender of a person etc.

Image Size: when first generating an image, most of the default sizes are 512x512. Usually you switch between 512x768 and 768x512 depending if you want a landscape or portrait (which again has huge impact on content of the image) most models are trains on 512x512 images so that size (allowing for portrait/landscape) is what you want to use to start with. Keep in mind, all the amazing AI images you see start out this size, they just get upscaled during the refinement stages.

Seed: This is important. Once you generate an image, a random seed is used 59872975 (for example) if you like the image and want to generate more similar images to it, you can lock that seed and your images will change but keep same basic layout. Normally when you start you keep it on "random" which randomized the entire AI image while using the prompts, model, sampler, etc as guides.

When you go to a model/lora/checkpoint etc site like

CivitAI you will see the authors' examples of what they created. if you look in the bottom right of the images on CivitAI you will see a "i", click on that and it tells you the exact prompts/negative prompts, base model, CFG scale, sample and seed they used to get the end result of that image. You can easily take that in info and create a similar (not exact) image to what you see as long as you use the same settings/model/sample/seed etc

Lora: Lora are trained specifically on a particular face/body, or object (like clothing style, car etc). So when you see all those random images above, if you insert into the prompt <lora:BradPitt:1.2> when the image it generated, it will use Brad Pitts likeness (as example you download the lora's from CivitAI, they are small files ranging from 9mp to 140mb.

Now say you also want your goblin, besides looking like BradPitt to be in a Jedi Robe, if there is a lora for that (or embedding style also works) you would use something like this with your prompts <lora:BradPitt:1.2> <lora:JediRobe:0.9> the number at the end is how much the AI is impacted in using that lora, the higher the more likely it appears in the image (ranges from like (0.1 to 1.5) if there is no jedi robe lora, you can take 50-100 images of jedi's in robes and train your own lora and then apply a jedi robe to anyone you want in the future. (it's a pain to figure out the training settings to begin with but once you do, you're off to the races and every other thing you train will be easy)

here's an example of

a Star Wars Rebel Pilot suit/helmet

So while you can see how kind of cool just a basic prompt of "1 goblin in a cell" can be an so many interesting results, you can easily modify that result with something like

prompt:

1 goblin in a wearing a collar, sitting in a cell with straw on the ground, rats, dungeon, ultra detailed, volumetric lighting, by Frank Frazetta

Negative prompt:

lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, bad hands, ugly hands, undetailed, deformed hands, deformed bodies, extra limbs

In the above example., you'd probably see some form of straw on the ground, a rat or two (maybe, just keep generating if you dont!) the lighting is important...so many types of lighting

lighting examples

Volumetric

Dramatic, Cinematic, Studio, Chiaroscuro,glowing,neon,holographic,bioluminescent,radiance,glistening,polished,satin, silken, reflective, mirrored, frosted, glittering, sparkling, shimmering, deeppled, filtered, backlit, silhouetted, highlighted, low-light, spotlight, floodlight, rim light, shadowed, contrasted,moon lighting

...and then can instantly switch from various styles, too many to list, but like oil panting, airbrush, pastel, photo realistic, 3D, hundreds or thousands of art, anime, etc styles.

You don't need to use artists but they of course have a huge influence on the painting/illustration/photo style. You can even combint multiple artists. Frank Frzetta and Boris Vallejo were rival artists with totally different styles if you use prompt by "Frank Frazetta and Boris Vallejo" the AI would blend those two styles. I'm notg sure is Boris is actuall one of th artist but Julie Bell who has similar style. Im not saying use those, there are 20,000 artists that were sampled....

Amazingly....with something like 20K artists it's already impressive but you can train your own.

Training:

You can train checkpoints, loras, etc. Lora are the most popular and easy to use to modify models and you'll see an ass-ton of them on CivitAI.

People are training practically every artist you find on sites like Deviant Art, etc. If you don't find your favorite, you can make you're own.

ALL OF THIS IS FREE

So lucky AI is open source. Stable Diffusion is out there and once you have it, it can't be taken away. Also, the the base model SD 1.5 is still used more than newer 2.0 and 2.1 models because it didn't weed out a lot of porn poses scanned so creating that using SD 1.5 is what you'll see used mostly on CivitAI because so far there's been no compelling reason for people to change to newer base models, even if based off higher resolution because the 1.5 can be upscaled anyway.

As with all new tech, AI art is driven by porn so you'll see a lot of pretty women, etc on sites like CivitAI. Keep in mind, the adult industry drives all visual innovation (VR, VHS, etc). Don't let it diminish the amazing things you can do with AI art. People just happen to like to see whoever they want sucking a cock or being sucked, etc sometimes. With AI now that's trivially easy to do.

Getting past the adult stuff. As long as you have 10-15 decent quality pictures of anyone you can create a very good model. That said, my younger pictures (70's and 80's) aren't that great in resolution etc. You can use software like Gigapixel AI to drastically improve a picture, sharpen the edges etc before using them to train though. I'm still going to try and find some images of my dad and create a model and maybe insert an age 30's-ish version of him into our family xmas card or something.

Oh also, if you use png as your output, all the prompt, model, cfg, seed etc settings are stored in the image. So you can go back to an image (or one someone else created) and drag that photo to the PNG tab on Automatic1111 and it will extract all the data (model, cfg, seed, scaler etc) and you can send it straight to image2image, text2image, inpaint, etc (download the models from CivitAi if you don't already have it) and start modifying without having to start from scratch.

This is still in it's infancy but even over the past 6 months it's grown by leaps and bounds. If you don't feel like doing the work locally, you can use online stable diffusion based sites like Midjourney, Playground AI, etc...there are a bunch, some with free limited number of images you can generate. Midjourney is the most popular but they cut off free trial due to so many deepfakes being made : ) (the Pope in a White jacket and Trump being arrested are recent popular fakes) If you have a decent GPU I'd recommend doing it yourself.

One of my favorite artists is Masamune Shirow (Lot of NSF stuff don't google at work). He's got extremely stylized/recognizable work. Someone scanned a few thousands of his pictures...now a couple things regarding this. Before you train an image, you run the images through a captioning process, for anime type stuff you use WD14 which uses the Danbooru archives to generate prompts, again dont go there from work but if you go to that site and choose any image, you'll see a ton of descriptive terms describing that image that can then be searched for "blue hair, looking at viewer, earrings, etc...along with adult descriptions as well) when you start training, those captions are embedded into your lora so when you choose "earrings" it finds the scans that had that description in them and has a better chance of using those in the generated image.

Anyway, because of the way that works, they included in a text file/folders all the artbooks with the prompts for each picture, now you cant EXACTLY reproduce the artbooks but come damn close when you generate using the prompts. It's amazing how similar it looks....and of course also, you can create anything you want in that style. not just being similar to the original pictures. So If I wanted to make my own artbook of Masamune Shirow with a theme of being on the moon in a futuristic city, i would include those prompts and then mess around with pose prompts, "sitting on a thrown" "drinking from a glass", tiger laying down, full body, wearing tight green space suit" I can make my own themed art and it looks incredibly good (the style compared to the original artist) and I can generate 100s of images all different instead of be limited to the 20-30 you normally see in an art book. It really is amazing.

Also, a note, for all the the high quality anime art, you use a specific engine and VAE (I didnt even talk about VAEs, yet another big factor in how images look). the model is called NAI and it was leaked from a company so that one you need to track down (pretty easy to do)

tldr: AI art is amazing. It sucks if you're an artist because it will impact you, just like if you're in jobs like customer service etc, ChatGPT etc are coming for your jobs soon...not something to be mad about, I mean what happened to all the horse people when cars arrived? They went away. It's going to happen, just need to deal with it.